Microservices Communication: How It Works and Why It's Best for Large-Scale Applications

Hi everyone! I'm Ganesh, a software engineer who has spent years working with different architectural patterns. The one that truly transformed the way I build and think about software is the microservices architecture. Two questions I get asked all the time are:

"How do microservices talk to each other?" and "Why do big companies choose microservices over monolithic architecture?"

So in this comprehensive guide, I'll walk you through everything you need to know about how microservices communicate with each other and why this architecture is especially useful in large-scale systems. We'll explore real-world examples from companies like Netflix, Amazon and Uber and dive deep into the technical implementations.

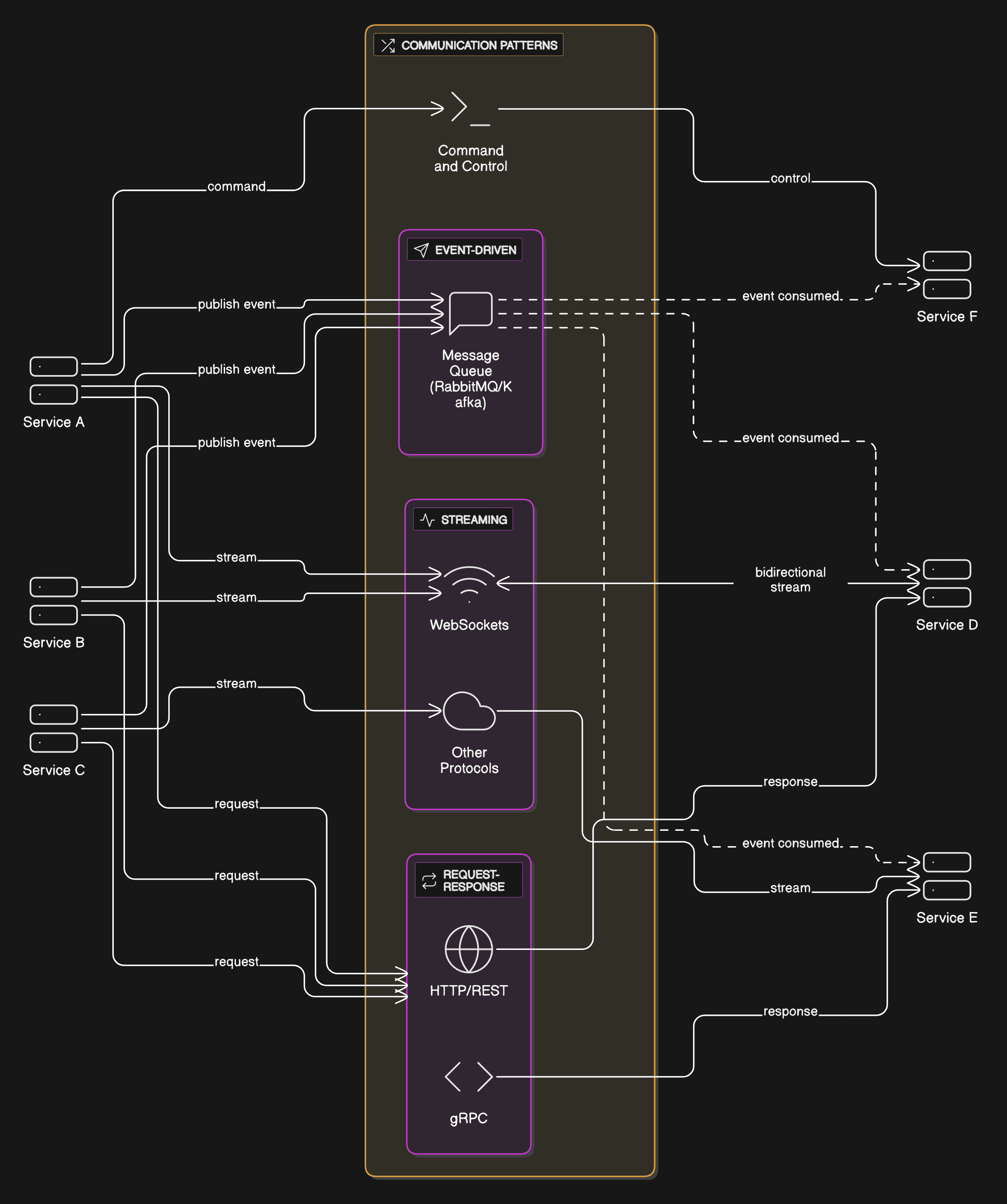

Figure 1: Overview of microservices communication patterns and protocols

Table of Contents

- 01. Introduction to Microservices
- 02. Why Not Monolithic for Large-Scale Applications?
- 03. Basics of Microservices Communication
- 04. Synchronous Communication (HTTP, gRPC)
- 05. Asynchronous Communication (Message Brokers)
- 06. API Gateway: Central Access Point
- 07. Service Discovery in Microservices
- 08. Security in Inter-Service Communication
- 09. Data Management and Communication
- 10. Real-World Communication Flow
- 11. Challenges in Microservices Communication
- 12. Why Microservices Work Well at Scale
- 13. Performance Optimization and Monitoring
- 14. Migration Strategies and Best Practices
- 15. Closing Thoughts

1. Introduction to Microservices

Let me start by explaining what microservices are and how they evolved in the software industry.

A microservices architecture is a way of designing software applications as a suite of small, independent services. Each service does one thing really well and can be developed, deployed and scaled independently. The style gained wide popularity after Martin Fowler and James Lewis published their influential 2014 article defining the term, though its ideas build on earlier work such as service-oriented architecture (SOA).

Historical Context and Evolution

The journey from monolithic applications to microservices didn't happen overnight. It was driven by the need for better scalability, maintainability and team productivity. Companies like Amazon, Netflix and eBay pioneered this transition, facing the limitations of their monolithic systems as they scaled to serve millions of users.

According to O'Reilly's 2020 survey on microservices adoption, 77% of respondents' organizations had adopted microservices to some degree, and 28% had been using them for at least three years. This widespread adoption speaks to the effectiveness of this architectural pattern.

Core Principles of Microservices

Microservices follow several key principles that distinguish them from other architectural patterns:

- Single Responsibility: Each service has one clear purpose
- Independence: Services can be developed, deployed and scaled independently
- Technology Diversity: Different services can use different technologies
- Data Isolation: Each service manages its own data
- Resilience: Services are designed to handle failures gracefully

For example, in a modern e-commerce application, you might have:

- User Service: handles authentication, user profiles and preferences
- Product Service: manages the product catalog, inventory and pricing
- Order Service: processes orders and manages the order lifecycle
- Payment Service: handles payment processing and transactions
- Notification Service: sends emails, SMS and push notifications
- Analytics Service: tracks user behavior and business metrics
- Search Service: provides product search and recommendations

Figure 2: Example e-commerce microservices architecture with communication flows

Each of these services runs separately, often in different containers or even on different servers, and they all need to communicate with each other to provide a seamless user experience. This "talking" between microservices is the heart of making the system work correctly and efficiently.

Industry Adoption and Success Stories

The success of microservices is evident in the transformation stories of major tech companies:

Processes over 2 billion API requests daily across 700+ microservices

Migrated from monolithic to microservices, enabling rapid growth

Handles 15+ million trips daily across 200+ cities

Manages 80+ microservices to serve 400+ million users

These companies demonstrate that microservices can scale to handle massive loads while maintaining system reliability and enabling rapid feature development.

2. Why Not Monolithic for Large-Scale Applications?

Before we dive deep into the communication mechanisms, let's first understand why companies move away from monolithic architecture as they grow. This transition is often driven by real pain points that emerge as applications scale.

Figure 3: Comparison between monolithic and microservices architectures

The Monolithic Challenge

A monolith is one big codebase where everything is bundled together — backend logic, database interaction, frontend templates, authentication, business logic and more. While this approach works well for small applications, it becomes increasingly problematic as the application grows.

Research from the DevOps Research and Assessment (DORA) team points in the same direction: a loosely coupled architecture, where teams can test and deploy their part of the system independently, is one of the capabilities most strongly associated with high software delivery performance.

Critical Problems I Faced with Monoliths in Large Projects

1. Tight Coupling and Complexity

In a monolithic application, all components are tightly coupled. One small change might break unrelated parts of the system, creating a domino effect of failures.

At a previous company, we had a monolithic e-commerce platform where updating the product catalog logic accidentally broke the user authentication system. The issue? Both systems shared the same database connection pool and our changes exhausted the connection limit.

This type of coupling makes the system fragile and unpredictable. According to research by Microsoft, tightly coupled systems require 3-5 times more testing to ensure changes don't break existing functionality.

2. Scaling Challenges

You have to scale the entire application even if just one component needs more resources. This is both inefficient and expensive.

Consider a social media platform where the image processing service needs to handle a viral post with millions of uploads, but the rest of the application (user profiles, posts, comments) has normal traffic. In a monolith, you'd need to scale the entire application, wasting resources on components that don't need it.

Research by AWS shows that monolithic applications typically use 40-60% more resources than necessary due to this scaling inefficiency.

3. Deployment Bottlenecks

Deployments take a long time, and a single bug can hold up the release of unrelated features.

At a fintech company I worked with, their monolithic application took 45 minutes to deploy. When a critical bug was found in the payment processing module, the entire deployment was blocked, delaying security updates for the user management system by two weeks.

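The 2021 Accelerate State of DevOps Report found that elite performers deploy 973 times more frequently than low performers. That comparison is between performance tiers rather than architectures, but independent deployment is exactly what a monolith makes hard.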

4. Team Coordination Issues

Multiple teams working on the same codebase create bottlenecks, merge conflicts and coordination overhead.

In a large e-commerce company, 15 teams were working on the same monolithic codebase. Each team had to coordinate releases, resolve merge conflicts and wait for others to complete their changes. This led to an average of 3-4 weeks between feature conception and production deployment.

Studies show that teams working on microservices are 2-3 times more productive than teams working on monolithic applications due to reduced coordination overhead.

5. Technology Limitations

You're locked into the technology stack chosen for the entire application, even if better alternatives exist for specific components.

A company had a Java-based monolithic application but wanted to add real-time features. They couldn't easily integrate WebSocket support or use Node.js for real-time components without major architectural changes.

Technology diversity in microservices allows teams to choose the best tool for each job, leading to better performance and faster development.

6. Testing Complexity

Testing becomes increasingly complex as the application grows, requiring longer test cycles and more resources.

A monolithic application with 2 million lines of code required 8 hours to run the full test suite. This meant that developers had to wait overnight to know if their changes broke anything.

Microservices enable faster, more focused testing, leading to higher code quality and faster feedback loops.

The Breaking Point

There's a specific point where managing a monolith becomes unsustainable. This typically happens when:

According to research by Martin Fowler, most organizations hit this breaking point when they reach 100-200 developers working on the same codebase.

Migration Triggers

Companies typically decide to migrate to microservices when they experience:

When you hit these pain points, microservices start to shine as a solution. The key is recognizing these signs early and planning the migration strategically.

3. Basics of Microservices Communication

Microservices must communicate because they are independent but still part of the same overall system. Understanding how these communications work is crucial for building reliable, scalable systems.

Figure 4: Different communication patterns in microservices architecture

Why Communication is Critical

In a microservices architecture, each service is responsible for a specific business capability. However, to deliver a complete user experience, these services need to work together. This collaboration happens through well-defined communication mechanisms.

According to research by Google, 70% of microservices failures are related to communication issues rather than individual service failures. This highlights the importance of getting communication right.

Communication Fundamentals

Before diving into specific protocols, let's understand the fundamental concepts that govern microservices communication:

1. Service Boundaries and Contracts

Each microservice has a well-defined boundary and exposes a contract (API) that other services can use to interact with it. This contract should be:

2. Network Reliability

Unlike in-process calls in monolithic applications, microservices communicate over the network, which introduces:

3. Distributed System Challenges

Microservices form a distributed system, which brings unique challenges:

Real-World Communication Flow

Let's walk through a comprehensive example of how microservices communicate in a real e-commerce scenario:

E-commerce Order Processing Flow

This flow involves both synchronous and asynchronous communication patterns, which we'll explore in detail in the following sections.

Communication Patterns

Microservices use various communication patterns depending on the use case:

1. Request-Response Pattern

This is the most common pattern where one service sends a request and waits for a response. It's used for:

2. Event-Driven Pattern

Services communicate through events, where one service publishes an event and others subscribe to it. It's used for:

3. Saga Pattern

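For complex business transactions that span multiple services, the saga pattern keeps data consistent without a distributed transaction. It breaks the transaction into a sequence of local transactions, each paired with a compensating action that undoes it if a later step fails. The result is eventual, not immediate, consistency.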
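The compensation idea can be sketched as a small orchestrator that runs each local step in order and, on failure, undoes the completed steps in reverse. This is a minimal in-memory sketch: the step names and the `cardDeclined` flag are hypothetical, each action stands in for a call to another service, and a production saga would also persist its progress so it can resume after a crash.

```javascript
// Minimal saga orchestrator: run steps in order; if one fails, run the
// compensations of the steps that already succeeded, newest first.
async function runSaga(steps, context) {
  const completed = [];
  for (const step of steps) {
    try {
      await step.action(context);
      completed.push(step);
    } catch (error) {
      for (const done of completed.reverse()) {
        // Compensations should be idempotent so they can safely be retried.
        await done.compensate(context);
      }
      throw new Error(`Saga failed at "${step.name}": ${error.message}`, { cause: error });
    }
  }
  return context;
}

// Hypothetical order saga; each action stands in for a call to another service.
const log = [];
const orderSaga = [
  {
    name: 'reserve-inventory',
    action: async () => log.push('inventory reserved'),
    compensate: async () => log.push('inventory released'),
  },
  {
    name: 'charge-payment',
    action: async (ctx) => {
      if (ctx.cardDeclined) throw new Error('card declined');
      log.push('payment charged');
    },
    compensate: async () => log.push('payment refunded'),
  },
  {
    name: 'confirm-order',
    action: async () => log.push('order confirmed'),
    compensate: async () => log.push('order cancelled'),
  },
];

const demo = runSaga(orderSaga, { orderId: '456', cardDeclined: true })
  .catch((err) => console.log(err.message)); // Saga failed at "charge-payment": card declined
```

With the card declined, `log` ends up as "inventory reserved" followed by "inventory released". This is the orchestration style; sagas can also be choreographed, with each service reacting to the previous service's events instead of a central coordinator.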

4. CQRS Pattern

Command Query Responsibility Segregation (CQRS) separates write operations (commands) from read operations (queries), so each side can use the data model and storage that suit its workload.
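A minimal in-process sketch of the split, assuming a hypothetical order domain: the command side enforces the rules and emits events, and the query side keeps a view shaped for one question ("how many orders has this user placed, and for how much?"). In a real system the two sides would typically be separate models, often with separate databases, connected by a message broker, which makes the read side eventually consistent.

```javascript
// Write side: validates commands and records what happened as events.
class OrderCommandHandler {
  constructor(publish) {
    this.orderStatus = new Map(); // write model: orderId -> status
    this.publish = publish;       // in production, a message broker
  }

  placeOrder({ orderId, userId, totalAmount }) {
    if (this.orderStatus.has(orderId)) throw new Error(`order ${orderId} already exists`);
    if (!(totalAmount > 0)) throw new Error('totalAmount must be positive');
    this.orderStatus.set(orderId, 'PLACED');
    this.publish({ type: 'order_placed', orderId, userId, totalAmount });
  }
}

// Read side: a denormalized view built for one query, "order summary per user".
class UserOrderSummaryView {
  constructor() {
    this.byUser = new Map(); // userId -> { count, totalSpent }
  }

  apply(event) {
    if (event.type !== 'order_placed') return;
    const row = this.byUser.get(event.userId) ?? { count: 0, totalSpent: 0 };
    row.count += 1;
    row.totalSpent += event.totalAmount;
    this.byUser.set(event.userId, row);
  }

  summaryFor(userId) {
    return this.byUser.get(userId) ?? { count: 0, totalSpent: 0 };
  }
}

const view = new UserOrderSummaryView();
const commands = new OrderCommandHandler((event) => view.apply(event));
commands.placeOrder({ orderId: '456', userId: '123', totalAmount: 59.98 });
commands.placeOrder({ orderId: '457', userId: '123', totalAmount: 10 });
console.log(view.summaryFor('123').count); // 2
```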

Communication Dimensions

Microservices communication can be categorized along several dimensions:

Synchronous vs Asynchronous

One-to-One vs One-to-Many

Stateful vs Stateless

Internal vs External

Performance Considerations

Network communication introduces performance overhead that needs to be considered:

According to research by Netflix, the average latency for inter-service communication in their microservices architecture is 15-25ms, which they've optimized through various techniques we'll discuss later.

Each of these communication patterns has specific use cases and trade-offs. The key is choosing the right pattern for each interaction based on requirements for consistency, performance and reliability.

4. Synchronous Communication (HTTP, gRPC)

In synchronous communication, one service sends a request and waits for the other service to respond. This is the most straightforward approach and is usually done using:

1. HTTP REST APIs

This is the most common method because it's easy to implement and understand. You create APIs in one service and call them from another using a URL.

Example: Product Availability Check

The Order Service calls GET /products/123 on the Product Service to check availability.

Node.js Implementation:

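const axios = require('axios');

// Ask the Product Service for a product (including availability).
// "product-service" is resolved by service discovery, e.g. Kubernetes DNS.
async function checkProduct(productId) {
  try {
    const response = await axios.get(
      `http://product-service/products/${encodeURIComponent(productId)}`,
      { timeout: 2000 } // assumed 2 s budget: fail fast instead of hanging
    );
    return response.data;
  } catch (error) {
    console.error('Product service error:', error.message);
    // Keep the underlying error as the cause for easier debugging.
    throw new Error('Product unavailable', { cause: error });
  }
}

Pros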

Cons
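Because a synchronous call leaves the caller waiting on another service over the network, it is usually wrapped in a timeout and a bounded retry. The helper below is a sketch of that idea; its name, option names and defaults (three retries, a 2-second timeout, a 100 ms base delay) are assumptions to tune per dependency.

```javascript
// Sleep helper used between attempts.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Call fn with a per-attempt timeout and retry failures with exponential
// backoff plus jitter. fn receives an AbortSignal to hand to its HTTP client.
// Only retry idempotent calls (such as GET): a request that timed out may
// still have been processed by the other service.
async function callWithRetry(fn, { retries = 3, timeoutMs = 2000, baseDelayMs = 100 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    const controller = new AbortController();
    let timer;
    const timeout = new Promise((_, reject) => {
      timer = setTimeout(() => {
        controller.abort(); // cancels the in-flight request if fn respects the signal
        reject(new Error(`timed out after ${timeoutMs}ms`));
      }, timeoutMs);
    });
    try {
      return await Promise.race([fn(controller.signal), timeout]);
    } catch (error) {
      lastError = error;
    } finally {
      clearTimeout(timer);
    }
    if (attempt < retries) {
      // 100ms, 200ms, 400ms, ... each stretched by up to 100% random jitter.
      await sleep(baseDelayMs * 2 ** attempt * (1 + Math.random()));
    }
  }
  throw lastError;
}

// Demo: a fake dependency that fails twice, then succeeds.
let calls = 0;
const flakyProductLookup = async () => {
  calls += 1;
  if (calls < 3) throw new Error('503 Service Unavailable');
  return { id: '123', inStock: true };
};

const demo = callWithRetry(flakyProductLookup, { baseDelayMs: 10 }).then((product) => {
  console.log(product.inStock, calls); // true 3
});
```

To use it with the Product Service call, pass a function that forwards the signal, for example `callWithRetry((signal) => axios.get(url, { signal }))`; axios accepts an AbortSignal through its `signal` option. Jitter spreads retries out so many callers don't hammer a recovering service at the same instant.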

2. gRPC (an open-source RPC framework originally developed at Google)

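If latency and throughput matter, gRPC is often a better option. It serializes messages with Protocol Buffers, a compact binary format, and runs over HTTP/2, which multiplexes many concurrent calls over a single connection. Together these usually make it faster than JSON-over-HTTP REST APIs for service-to-service calls.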

Performance Comparison

- REST: ~50-100ms average latency
- gRPC: ~10-25ms average latency

Example: User Authentication Service

Example use case: Real-time systems or internal services with high performance needs.

Protocol Buffer Definition:

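syntax = "proto3";

// Token validation that other services call before serving a request.
service AuthService {
  rpc ValidateToken(TokenRequest) returns (TokenResponse);
}

message TokenRequest {
  string token = 1;
}

message TokenResponse {
  bool valid = 1;
  string user_id = 2;               // lower_snake_case, per the protobuf style guide
  repeated string permissions = 3;
}

Pros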

Cons
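To see where the compactness comes from, here is a hand-rolled encoder for the `TokenResponse` message defined above, following the Protocol Buffers wire format (a varint key per field, length-prefixed strings). It is for illustration only: real services use code generated by `protoc` or loaded at runtime with a library such as `@grpc/proto-loader`, and the sample values are made up.

```javascript
// Encode a non-negative integer as a protobuf base-128 varint:
// 7 bits per byte, high bit set on every byte except the last.
function encodeVarint(n) {
  const bytes = [];
  while (n > 0x7f) {
    bytes.push((n & 0x7f) | 0x80);
    n >>>= 7;
  }
  bytes.push(n);
  return bytes;
}

const WIRE_VARINT = 0; // bool, int32, int64, ...
const WIRE_LEN = 2;    // string, bytes, embedded messages

// Each field starts with a key: (field_number << 3) | wire_type.
const fieldKey = (fieldNumber, wireType) => encodeVarint((fieldNumber << 3) | wireType);

function encodeString(fieldNumber, value) {
  const data = Buffer.from(value, 'utf8');
  return [...fieldKey(fieldNumber, WIRE_LEN), ...encodeVarint(data.length), ...data];
}

// Hand-encode a TokenResponse: valid = field 1, user ID = field 2, permissions = field 3.
// proto3 skips fields that hold their default value (false, empty string).
function encodeTokenResponse({ valid, userId, permissions = [] }) {
  const out = [];
  if (valid) out.push(...fieldKey(1, WIRE_VARINT), 1);
  if (userId) out.push(...encodeString(2, userId));
  for (const p of permissions) out.push(...encodeString(3, p));
  return Buffer.from(out);
}

const response = { valid: true, userId: 'u123', permissions: ['read', 'write'] };
const binary = encodeTokenResponse(response);
const json = Buffer.from(JSON.stringify(response), 'utf8');
console.log(binary.length, json.length); // 21 61
```

For this message the binary form is 21 bytes against 61 bytes of equivalent JSON, mostly because field names are replaced by one-byte field keys. Size is only part of gRPC's advantage; HTTP/2 connection reuse and cheaper parsing matter too.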

When to Use Each Protocol

Use REST APIs when:

Use gRPC when:

5. Asynchronous Communication (Message Brokers)

In asynchronous communication, one service publishes an event or sends a message and carries on without waiting; the receiving service processes it when it can. This is the foundation of event-driven architecture.

Popular Message Brokers

This is done using message brokers like:

Advanced message queuing with routing capabilities

Distributed streaming platform for high-throughput

Managed message queuing service

Scalable messaging service

In-memory streaming with persistence

Cloud-native messaging and streaming

Example with Kafka: Order Processing

Let's say the Order Service places an order. Instead of directly calling the Notification Service, it just emits an event like:

Event Payload:

{
  "event": "order_placed",
  "orderId": "456",
  "userId": "123",
  "timestamp": "2024-01-15T10:30:00Z",
  "items": [
    {
      "productId": "789",
      "quantity": 2,
      "price": 29.99
    }
  ],
  "totalAmount": 59.98
}

The Notification Service listens for this event and sends the confirmation email asynchronously.
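The decoupling can be shown without a running broker. The sketch below replaces Kafka with a hypothetical in-memory topic (an append-only log plus a read offset per consumer group, which is the core of Kafka's model) so that it runs on its own; with a real cluster you would use a client library such as kafkajs instead of `InMemoryTopic`.

```javascript
// In-memory stand-in for a Kafka topic: an append-only log that every
// consumer group reads at its own offset. Illustrative only, no persistence.
class InMemoryTopic {
  constructor() {
    this.log = [];
    this.offsets = new Map(); // consumer group -> next offset to read
  }

  publish(event) {
    this.log.push(event);
  }

  // Return events this group has not read yet, then advance its offset.
  poll(group) {
    const start = this.offsets.get(group) ?? 0;
    this.offsets.set(group, this.log.length);
    return this.log.slice(start);
  }
}

// Order Service: publishes the event and returns; it never calls the Notification Service.
function placeOrder(topic, order) {
  topic.publish({ event: 'order_placed', timestamp: new Date().toISOString(), ...order });
}

// Notification Service: consumes at its own pace. Delivery is usually
// at-least-once, so it remembers handled orderIds and skips duplicates.
function createNotificationConsumer(sendEmail) {
  const handled = new Set();
  return (topic) => {
    for (const evt of topic.poll('notification-service')) {
      if (evt.event !== 'order_placed' || handled.has(evt.orderId)) continue;
      handled.add(evt.orderId);
      sendEmail(evt.userId, `Your order ${evt.orderId} is confirmed`);
    }
  };
}

const topic = new InMemoryTopic();
const sent = [];
const consumeNotifications = createNotificationConsumer((userId, text) => sent.push({ userId, text }));

placeOrder(topic, { orderId: '456', userId: '123', totalAmount: 59.98 });
placeOrder(topic, { orderId: '456', userId: '123', totalAmount: 59.98 }); // e.g. a producer retry
consumeNotifications(topic);

console.log(sent.length);                            // 1
console.log(topic.poll('analytics-service').length); // 2
```

Because each consumer group tracks its own offset, an Analytics Service can read the same events independently, and the Order Service never needs to know that either consumer exists.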

Pros

Cons

Event-Driven Architecture Benefits

6. API Gateway: Central Access Point

One thing I quickly realized is that having each microservice exposed to the client is messy. That's where an API Gateway comes in.

What Is an API Gateway?

An API Gateway is a single entry point for all clients (mobile apps, frontend apps) to interact with the backend services. It acts as a reverse proxy that routes requests to the appropriate microservices.
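To make the routing role concrete, here is a minimal reverse proxy using only Node's built-in `http` module. It is a sketch, not a production gateway: the routing table and the upstream host names and ports are hypothetical, and it skips what a tool like Kong or NGINX adds (TLS termination, authentication, rate limiting, retries, header sanitizing).

```javascript
const http = require('node:http');

// Hypothetical routing table: path prefix -> upstream service address.
const ROUTES = [
  { prefix: '/api/users', upstream: { host: 'user-service', port: 8080 } },
  { prefix: '/api/products', upstream: { host: 'product-service', port: 8080 } },
  { prefix: '/api/orders', upstream: { host: 'order-service', port: 8080 } },
];

// Find the route for a request URL; the longest matching prefix wins.
function resolveRoute(url, routes = ROUTES) {
  let best = null;
  for (const route of routes) {
    const rest = url.slice(route.prefix.length);
    const matches = url.startsWith(route.prefix) && (rest === '' || rest[0] === '/' || rest[0] === '?');
    if (matches && (!best || route.prefix.length > best.prefix.length)) best = route;
  }
  return best;
}

function sendJson(res, status, body) {
  if (res.headersSent) return res.destroy(); // too late for a clean error response
  res.writeHead(status, { 'content-type': 'application/json' });
  res.end(JSON.stringify(body));
}

// Reverse-proxy one request to the matching upstream and stream the reply back.
function handleRequest(req, res) {
  const route = resolveRoute(req.url);
  if (!route) return sendJson(res, 404, { error: 'no route' });

  const upstreamReq = http.request(
    { ...route.upstream, method: req.method, path: req.url, headers: req.headers },
    (upstreamRes) => {
      res.writeHead(upstreamRes.statusCode, upstreamRes.headers);
      upstreamRes.pipe(res);
    }
  );
  upstreamReq.on('error', () => sendJson(res, 502, { error: 'upstream unavailable' }));
  req.pipe(upstreamReq);
}

// Create the server; call gateway.listen(8080) to start accepting traffic.
const gateway = http.createServer(handleRequest);
```

Matching on a prefix boundary (end of string, `/` or `?`) keeps a path like `/api/usersettings` from being routed to the user service by accident.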

Key Benefits

Popular API Gateway Tools

High-performance web server and reverse proxy

Cloud-native API gateway with plugin ecosystem

Managed API gateway service

Modern HTTP reverse proxy and load balancer

Kubernetes-native API gateway

Netflix's dynamic routing and filtering

Example: E-commerce API Gateway Configuration

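Kong Gateway Configuration (declarative kong.yml; upstream hosts and ports are placeholders):

_format_version: "3.0"

services:
  - name: user-service
    url: http://user-service:8080
    routes:
      - name: users
        paths: ["/api/users"]
    plugins:
      - name: rate-limiting
        config:
          minute: 100
          policy: local
      # Validates incoming JWTs; signing secrets belong to consumers (below), not here
      - name: jwt

  - name: product-service
    url: http://product-service:8080
    routes:
      - name: products
        paths: ["/api/products"]
    plugins:
      - name: proxy-cache
        config:
          strategy: memory
          response_code: [200]
          content_type: ["application/json"]

  - name: order-service
    url: http://order-service:8080
    routes:
      - name: orders
        paths: ["/api/orders"]
    plugins:
      - name: cors
      - name: request-transformer
        config:
          add:
            headers: ["X-API-Version:v1"]

consumers:
  - username: web-app
    jwt_secrets:
      - key: web-app-issuer        # matched against the token's "iss" claim
        secret: "your-secret-key"

7. Service Discovery in Microservices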

When services scale dynamically, their IP addresses can change often (especially in containers or on Kubernetes), so hardcoding service URLs is fragile.

What is Service Discovery?

It's a system that keeps track of all running instances of each service and their network addresses. Think of it as a phone book for your microservices.

Popular Service Discovery Tools

Service mesh solution with health checking

REST-based service registry

Built-in DNS-based discovery

Distributed key-value store

Apache's coordination service

Service mesh with advanced discovery

Example: Service Discovery Flow

When the Order Service wants to talk to the Payment Service, it asks the discovery service:

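Discovery Request (Consul's health endpoint; ?passing returns only instances whose health checks pass):

GET /v1/health/service/payment-service?passing

Response (simplified; Consul actually returns an array of Node, Service and Checks objects per instance):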

{
  "service": "payment-service",
  "instances": [
    { "id": "payment-1", "address": "10.0.1.5", "port": 8080, "status": "passing" },
    { "id": "payment-2", "address": "10.0.1.6", "port": 8080, "status": "passing" }
  ]
}

It gets back a list of instances to pick from, and the client can use load balancing to choose one.
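Once the client has the instance list, picking one can be as simple as round-robin. This sketch assumes the simplified response shape shown above, including the `status` field; the function names are hypothetical, and client libraries and service meshes usually handle this for you, often with smarter strategies such as least-outstanding-requests.

```javascript
// Instances from the discovery lookup (same simplified shape as the response above).
const paymentInstances = [
  { id: 'payment-1', address: '10.0.1.5', port: 8080, status: 'passing' },
  { id: 'payment-2', address: '10.0.1.6', port: 8080, status: 'passing' },
];

// Client-side round-robin over healthy instances. getInstances would normally
// return a cached registry lookup that is refreshed in the background.
function createRoundRobin(getInstances) {
  let counter = 0;
  return function pick() {
    const healthy = getInstances().filter((instance) => instance.status === 'passing');
    if (healthy.length === 0) throw new Error('no healthy instances available');
    const chosen = healthy[counter % healthy.length];
    counter += 1;
    return `http://${chosen.address}:${chosen.port}`;
  };
}

const pickPayment = createRoundRobin(() => paymentInstances);
console.log(pickPayment()); // http://10.0.1.5:8080
console.log(pickPayment()); // http://10.0.1.6:8080
console.log(pickPayment()); // http://10.0.1.5:8080
```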

Benefits

Challenges

8. Security in Inter-Service Communication

In a distributed system, security becomes more complicated. With multiple services communicating over networks, security must be implemented at multiple layers.

Key Security Techniques

Both services authenticate each other using certificates. Provides encryption and mutual authentication.

For authorization between services. Stateless and scalable authentication mechanism.

For internal-only services. Simple but effective for service-to-service authentication.

For user-level authentication and service-to-service permissions. Industry standard.
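One stateless way to authorize calls between services is a signed JSON Web Token (JWT), and the check each receiving service performs is small enough to sketch. This hand-rolled HS256 example uses only Node's `crypto` module to show what gets verified; the service names, shared secret and 60-second lifetime are assumptions. In production, prefer a maintained library such as `jose`, and asymmetric algorithms (RS256/ES256) so that services that only verify tokens never hold the signing key.

```javascript
const crypto = require('node:crypto');

const toB64Url = (data) => Buffer.from(data).toString('base64url');
const fromB64Url = (text) => JSON.parse(Buffer.from(text, 'base64url').toString('utf8'));
const hmac = (secret, data) => crypto.createHmac('sha256', secret).update(data).digest();

// Issue a short-lived HS256 token: base64url(header).base64url(claims).signature
function signToken(claims, secret) {
  const header = toB64Url(JSON.stringify({ alg: 'HS256', typ: 'JWT' }));
  const payload = toB64Url(JSON.stringify(claims));
  return `${header}.${payload}.${toB64Url(hmac(secret, `${header}.${payload}`))}`;
}

// Check algorithm, signature, expiry and audience; return the claims or throw.
function verifyToken(token, secret, audience, nowSeconds = Math.floor(Date.now() / 1000)) {
  const parts = token.split('.');
  if (parts.length !== 3) throw new Error('malformed token');
  const [header, payload, signature] = parts;
  // Pin the algorithm; never let the token choose it (for example "none").
  if (fromB64Url(header).alg !== 'HS256') throw new Error('unexpected algorithm');
  const expected = hmac(secret, `${header}.${payload}`);
  const actual = Buffer.from(signature, 'base64url');
  if (actual.length !== expected.length || !crypto.timingSafeEqual(actual, expected)) {
    throw new Error('invalid signature');
  }
  const claims = fromB64Url(payload);
  if (typeof claims.exp !== 'number' || claims.exp <= nowSeconds) throw new Error('token expired');
  if (claims.aud !== audience) throw new Error('token not meant for this service');
  return claims;
}

// Order Service calls Payment Service with a 60-second token (demo secret only).
const secret = 'demo-shared-secret';
const now = Math.floor(Date.now() / 1000);
const token = signToken({ sub: 'order-service', aud: 'payment-service', exp: now + 60 }, secret);
console.log(verifyToken(token, secret, 'payment-service').sub); // order-service
```

Checking `aud` matters between services: a token minted for one service should not be replayable against another.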

Critical Security Considerations

9. Data Management and Communication

Each microservice should ideally manage its own database. This is called the Database per Service pattern.

Why Database per Service?

Example: Data Communication Strategy

The Analytics Service listens to order_placed and payment_completed events, stores its own copy of what it needs and doesn't query other databases directly.

This avoids complex joins and keeps services loosely coupled.
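Here is a sketch of how the Analytics Service might build its own copy from those events. The event shapes, including the `eventId` field used for de-duplication and the `payment_completed` payload, are assumptions. The point is that the revenue query is answered entirely from local data, and that the store tolerates duplicate and out-of-order events, which event-driven systems have to expect.

```javascript
// The Analytics Service's own store, built only from events it consumes.
class OrderAnalyticsStore {
  constructor() {
    this.orders = new Map();       // orderId -> { userId, totalAmount, paid }
    this.seenEventIds = new Set(); // makes redelivered events harmless
  }

  handle(event) {
    if (event.event !== 'order_placed' && event.event !== 'payment_completed') return;
    if (this.seenEventIds.has(event.eventId)) return;
    this.seenEventIds.add(event.eventId);

    // Events for one order may arrive in either order, so merge into a single row.
    const row = this.orders.get(event.orderId) ?? { userId: null, totalAmount: 0, paid: false };
    if (event.event === 'order_placed') {
      row.userId = event.userId;
      row.totalAmount = event.totalAmount;
    } else {
      row.paid = true;
    }
    this.orders.set(event.orderId, row);
  }

  // Answered from local data only: no query against another service's database.
  paidRevenue() {
    let total = 0;
    for (const row of this.orders.values()) {
      if (row.paid) total += row.totalAmount;
    }
    return total;
  }
}

const store = new OrderAnalyticsStore();
store.handle({ eventId: 'e2', event: 'payment_completed', orderId: '456' }); // arrives first
store.handle({ eventId: 'e1', event: 'order_placed', orderId: '456', userId: '123', totalAmount: 59.98 });
store.handle({ eventId: 'e1', event: 'order_placed', orderId: '456', userId: '123', totalAmount: 59.98 }); // redelivered
store.handle({ eventId: 'e3', event: 'order_placed', orderId: '457', userId: '124', totalAmount: 10 });
console.log(store.paidRevenue()); // 59.98
```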

10. Real-World Communication Flow

Let me share a comprehensive example I worked on recently.

BookMyShow-style Ticket Booking System

Services:

Booking Flow:

/book

Detailed Communication Flow

booking_successful event (asynchronous)

This mix of synchronous and asynchronous communication made the system reliable and scalable.

11. Challenges in Microservices Communication

Nothing is perfect and microservices come with their own set of challenges.

Common Challenges I Faced

Solutions I Use

12. Why Microservices Work Well at Scale

Here's why companies like Netflix, Amazon, Uber and many others use microservices:

You can scale only what's needed. If the Product Service gets heavy traffic, scale just that.

Teams can deploy independently. No need to wait for other teams or merge conflicts in one big repo.

One service in Node.js, another in Go and another in Python — no problem.

Each team owns one or more services. Better ownership and productivity.

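If one service crashes, the others can keep working, as long as callers handle its failure with timeouts and fallbacks. The system degrades gracefully instead of going down as a whole.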

Microservices fit perfectly into automated testing, deployment and rollback systems.
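Graceful degradation does not happen on its own: callers have to stop waiting on a dependency that keeps failing. A common technique for that is the circuit breaker. The sketch below is minimal and makes assumptions throughout: the thresholds, the injectable clock (there to make it testable) and the recommendations example are hypothetical, and concurrent trial calls in the half-open state are not limited. Libraries such as opossum provide production-ready breakers for Node.js.

```javascript
// Minimal circuit breaker: after `failureThreshold` consecutive failures it
// opens and fails fast for `resetTimeoutMs`, then lets a trial call through
// (half-open). Success closes it again; failure re-opens it.
class CircuitBreaker {
  constructor(fn, { failureThreshold = 5, resetTimeoutMs = 10000, now = () => Date.now() } = {}) {
    this.fn = fn;
    this.failureThreshold = failureThreshold;
    this.resetTimeoutMs = resetTimeoutMs;
    this.now = now;
    this.state = 'CLOSED';
    this.failures = 0;
    this.openedAt = 0;
  }

  async call(...args) {
    if (this.state === 'OPEN') {
      if (this.now() - this.openedAt < this.resetTimeoutMs) {
        throw new Error('circuit open: failing fast');
      }
      this.state = 'HALF_OPEN'; // reset window elapsed: allow a trial request
    }
    try {
      const result = await this.fn(...args);
      this.state = 'CLOSED';
      this.failures = 0;
      return result;
    } catch (error) {
      this.failures += 1;
      if (this.state === 'HALF_OPEN' || this.failures >= this.failureThreshold) {
        this.state = 'OPEN';
        this.openedAt = this.now();
      }
      throw error;
    }
  }
}

// Demo with a fake clock and a hypothetical recommendations dependency.
let clock = 0;
let healthy = false;
const fetchRecommendations = async () => {
  if (!healthy) throw new Error('recommendation service down');
  return ['item-1', 'item-2'];
};
const breaker = new CircuitBreaker(fetchRecommendations, {
  failureThreshold: 2,
  resetTimeoutMs: 1000,
  now: () => clock,
});

// Fallback: show no recommendations rather than failing the whole page.
const getRecommendations = () => breaker.call().catch(() => []);
```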

13. Performance Optimization and Monitoring

Microservices can be optimized for performance and monitored effectively. Here are some strategies:

Performance Strategies

Monitoring Tools
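Most distributed tracing tools rely on one mechanism: every service forwards a trace identifier on each outgoing call, so a single request can be followed across services. The sketch below follows the W3C Trace Context `traceparent` header format; the helper name is hypothetical, and in practice an instrumentation library such as OpenTelemetry creates and propagates these headers automatically.

```javascript
const crypto = require('node:crypto');

// W3C Trace Context: traceparent = "00-<32-hex trace-id>-<16-hex parent-id>-<2-hex flags>"
const TRACEPARENT = /^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/;

// Continue the caller's trace if the incoming header is valid; otherwise start a new one.
function childTraceparent(incoming) {
  const match = typeof incoming === 'string' ? TRACEPARENT.exec(incoming) : null;
  // All-zero trace or parent IDs are invalid per the spec.
  const valid = match && !/^0+$/.test(match[1]) && !/^0+$/.test(match[2]);
  const traceId = valid ? match[1] : crypto.randomBytes(16).toString('hex');
  const flags = valid ? match[3] : '01'; // 01 = sampled
  const spanId = crypto.randomBytes(8).toString('hex'); // identifies this hop
  return `00-${traceId}-${spanId}-${flags}`;
}

// In the Order Service, forward it on every outgoing call, e.g.
// axios.get(url, { headers: { traceparent: childTraceparent(req.headers.traceparent) } })
const incoming = '00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01';
const outgoing = childTraceparent(incoming);
console.log(outgoing.split('-')[1] === incoming.split('-')[1]); // true
```

Keeping the trace ID while minting a new span ID per hop is what lets a tracing backend stitch the calls into one timeline.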

14. Migration Strategies and Best Practices

Migrating from monolithic to microservices can be challenging. Here are some best practices:

Migration Best Practices

15. Closing Thoughts

I hope this comprehensive guide helped you understand how microservices communicate and why they are the best fit for large-scale systems. From HTTP APIs and gRPC to Kafka and RabbitMQ, there are many ways for services to talk — and choosing the right one depends on your use case.

Key Takeaways

For me, once I moved from monolith to microservices in large projects, I saw improvements in speed, reliability and developer happiness. Yes, it's more complex to manage, but the benefits at scale make it worth it.

Let me know if you want me to dive deeper into a specific communication protocol or tool — I'll be happy to share more.

Happy coding!